YouTube Introduces Mandatory Labeling for AI-Generated Content Amid Rise of Misinformation

As AI-generated content proliferates online, concerns about misinformation and deception have grown. To address them, YouTube, one of the world's largest video-sharing platforms, has announced mandatory labeling for AI-generated content, per NBC News. The move aims to make content creators more transparent and accountable while helping users make informed decisions about the media they consume.

In an announcement on Monday (March 18, 2024), YouTube outlined its new policy, which requires creators to disclose whether their uploaded videos contain altered or synthetic media, including content generated with artificial intelligence. For videos on sensitive topics such as health, news, elections, or finance, YouTube will display the label more prominently.

When uploading a new video, creators will be asked whether it does any of the following: makes a real person appear to say or do something they didn't, alters footage of real events or places, or generates a realistic-looking scene that didn't actually occur. If the creator answers yes, YouTube adds the label "Altered or synthetic content" to the video description, signaling to viewers that the video contains manipulated media.

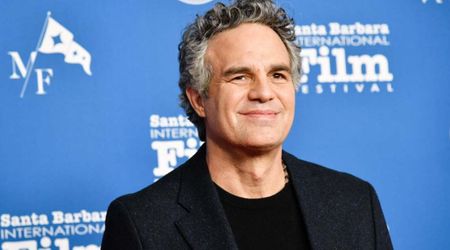

Although YouTube said the feature was available immediately, some users reported that it was not yet accessible to them. Nevertheless, the initiative underscores the platform's commitment to combating AI-generated misinformation, a persistent problem across online spaces. A January NBC News investigation found unlabeled AI-generated content to be widespread on YouTube, illustrating the urgency of such measures: the platform has hosted everything from fake news narrated with AI-generated audio to manipulated thumbnails featuring celebrities. Not every such video would necessarily require a label under the new rules, but YouTube's effort marks a significant step toward curbing the spread of misinformation.

YouTube also clarified that not all AI-assisted content creation requires disclosure. Using AI for text-to-speech or to generate scripts and captions, for example, does not require a label unless it is intended to deceive viewers. This approach aims to balance transparency with creative freedom, recognizing the many ways creators use AI in their production processes.

The labeling feature is rolling out first on mobile, and YouTube plans to extend it to desktop browsers and YouTube TV in the coming weeks. The platform also said it may penalize creators who consistently fail to disclose such content, underscoring the role of accountability in maintaining a trustworthy platform. In addition, YouTube reserves the right to apply labels itself when content could confuse or mislead viewers.

As YouTube works to address AI-generated misinformation, its parent company, Google, faces similar challenges with consumer AI products such as Gemini. After Gemini was criticized for generating historically inaccurate images, such as depicting non-white people in historical settings where they would not have appeared, Google temporarily paused the tool's ability to generate images of people. These developments highlight the broader industry's struggle with the ethical implications of AI-driven technologies.

YouTube's decision to implement mandatory labeling for AI-generated content represents a crucial step toward promoting transparency and combating misinformation on its platform. However, the effectiveness of this policy hinges on robust enforcement and continual adaptation to evolving AI capabilities.