What an AI Content Detector Score Really Means

An AI detection score does not prove authorship. The system estimates likelihood based on learned patterns.

Published Dec. 26, 2025, 3:00 p.m. ET

AI-written content now appears across blogs, academic work, and business writing. Because of this trend, many people rely on an AI content detector score to judge whether their text looks machine-generated. That number often looks precise and authoritative, but its meaning is frequently misunderstood.

Understanding what the score actually represents helps writers avoid panic and unnecessary edits.

An AI Detection Score Represents Probability

An AI detection score does not prove authorship. The system estimates likelihood based on learned patterns. Those patterns come from sentence structure, repetition, and predictability.
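To make "learned patterns" a little more concrete, here is a minimal sketch of one surface signal, repetition. It is only an illustration: the function name `repeated_bigram_ratio` and the sample sentences are hypothetical, and real detectors rely on trained models rather than a single hand-written measure like this.

```python
import re
from collections import Counter


def repeated_bigram_ratio(text):
    """Share of adjacent word pairs that occur more than once (0.0 to 1.0).

    A toy repetition signal, not the method of any real detector.
    """
    words = re.findall(r"[a-z']+", text.lower())
    bigrams = list(zip(words, words[1:]))
    if not bigrams:
        return 0.0
    counts = Counter(bigrams)
    # Count every occurrence of a word pair that appears at least twice.
    repeated = sum(n for n in counts.values() if n > 1)
    return repeated / len(bigrams)


# Hypothetical sample sentences, written for this illustration.
repetitive = "The tool is fast. The tool is simple. The tool is cheap."
varied = "Quick results matter. Simplicity helps beginners, and the low price seals it."

print(repeated_bigram_ratio(repetitive))
print(repeated_bigram_ratio(varied))
```

The repetitive sample gets a higher ratio than the varied one, yet neither number says anything about who wrote the text. It only describes a pattern on the page.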

Many users treat the score as a final verdict. That reaction causes stress and confusion. The number reflects only how closely the text matches patterns in the detector's training examples.

An AI detector cannot understand intent or context. It models statistical patterns, not meaning, so its output is a statistical estimate rather than a human judgment.

Why Scores Differ Between Tools

Different detection tools use different training data. Some rely on older samples. Others update their models more often.

Scoring thresholds also vary by platform. One system flags content aggressively. Another system stays conservative. The same paragraph may receive conflicting scores. This difference reflects training design, not dishonesty.
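The effect of different thresholds can be sketched in a few lines. The tool names, threshold values, and the example probability below are all hypothetical, chosen only to show how one estimate can lead to two different labels.

```python
def label(probability, threshold):
    """Turn a probability estimate into a label using a platform's cutoff."""
    return "flagged" if probability >= threshold else "not flagged"


# Hypothetical cutoffs: one aggressive platform, one conservative platform.
thresholds = {"aggressive_tool": 0.40, "conservative_tool": 0.80}

# Hypothetical estimate for the same paragraph.
probability = 0.55

results = {name: label(probability, cutoff) for name, cutoff in thresholds.items()}
print(results)
```

In this sketch the same paragraph is flagged by one tool and passed by the other, even though the underlying estimate never changed.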

Writing Style Influences Scores Strongly

Simple writing often triggers higher detection scores. Clear explanations appear predictable to algorithms. Structured language resembles common AI output.

Complex phrasing sometimes lowers detection results. That does not make content better. It only changes pattern behavior.

Academic and technical writing face similar challenges. A consistent tone can look mechanical to detectors, so human authors are sometimes flagged unfairly.

Text Length Affects Detection Reliability

Short text samples cause unreliable results. A few sentences provide limited pattern data. Scores swing widely with small inputs.

Longer content improves analysis accuracy. More structure gives detection systems stronger signals. A word counter helps confirm that a draft is long enough to scan. Very short drafts should not be scanned.
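A toy simulation shows why small samples swing more. It assumes, purely for illustration, that each sentence contributes an independent noisy signal between 0 and 1 and that the score is their average. Real detectors do not work this simply, and the function names and sample sizes here are hypothetical.

```python
import random
import statistics


def simulated_score(num_sentences, rng):
    """Average of per-sentence signals drawn uniformly from [0, 1] (toy model)."""
    return statistics.mean([rng.random() for _ in range(num_sentences)])


def score_spread(num_sentences, trials=2000, seed=0):
    """Standard deviation of the simulated score across many random texts."""
    rng = random.Random(seed)
    scores = [simulated_score(num_sentences, rng) for _ in range(trials)]
    return statistics.pstdev(scores)


short_spread = score_spread(3)   # a few sentences
long_spread = score_spread(60)   # a longer draft

print(f"spread with 3 sentences:  {short_spread:.3f}")
print(f"spread with 60 sentences: {long_spread:.3f}")
```

Under these assumptions the three-sentence scores scatter far more than the sixty-sentence scores, which is the statistical reason a short snippet gives a less trustworthy number.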

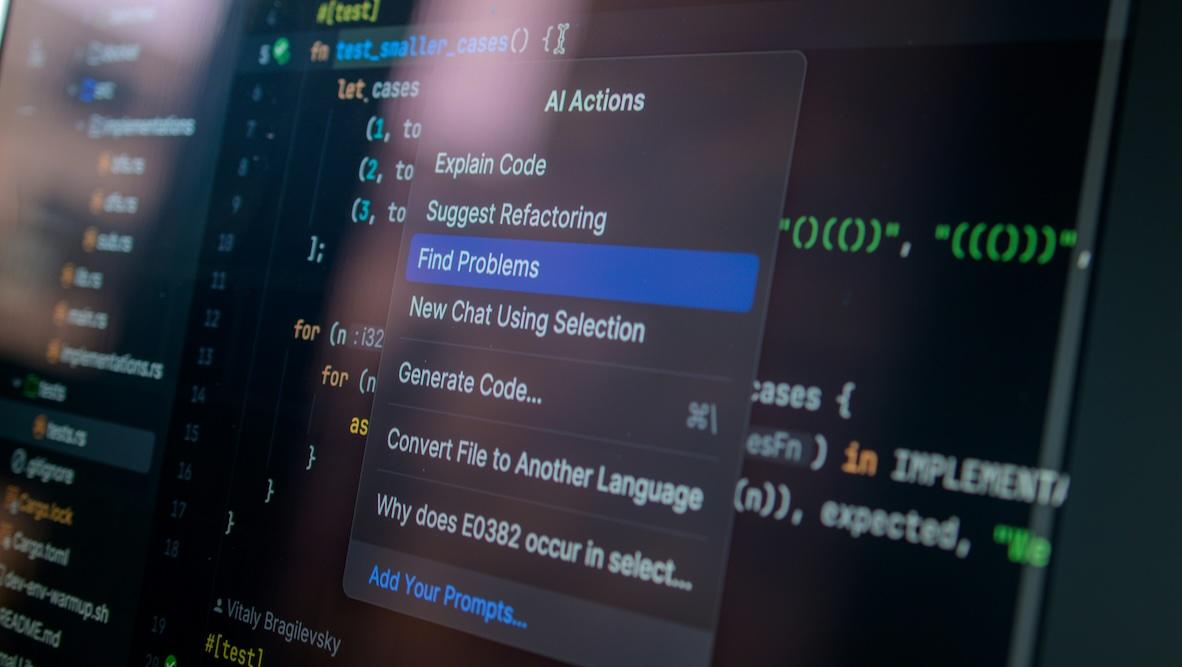

How Editing Tools Change Detection Scores

Editing tools alter text structure quietly. Detection systems respond to those changes.

A paraphrasing tool rewrites surface language while keeping meaning intact. Structural patterns often remain mechanical. Detection models can often recognize this rewrite behavior, so scores may remain high or even increase.

A summarizer removes examples and background explanations. Shortened text appears compressed. Detection systems associate compression with machine output.

A grammar checker removes natural imperfections from writing. Polished structure becomes uniform. Uniform writing often triggers suspicion. One correction pass usually works better than repeated polishing.

What Highlighted Sections Really Indicate

Some detection tools highlight specific sentences. These highlights matter more than overall percentages.

Repetition frequently appears in flagged sections. Sentence rhythm may remain flat. Transitions can sound forced. Manual editing improves these areas naturally. Reading the text aloud helps spot problems.
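Flat rhythm can be checked roughly by looking at how much sentence lengths vary. The sketch below is a simple self-editing aid under stated assumptions, not how any detector produces its highlights. The function names and sample passages are hypothetical, and the sentence splitting is deliberately naive.

```python
import re
import statistics


def sentence_lengths(text):
    """Word count of each sentence, splitting naively on ., ! and ?."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def rhythm_spread(text):
    """Standard deviation of sentence lengths; 0.0 means perfectly flat rhythm."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths)


# Hypothetical sample passages, written for this illustration.
flat = "The tool is fast. The tool is simple. The tool is cheap."
varied = (
    "It works. After a week of daily use, though, "
    "the small delays start to add up. Still worth it?"
)

print(sentence_lengths(flat), rhythm_spread(flat))
print(sentence_lengths(varied), rhythm_spread(varied))
```

A spread near zero suggests every sentence has about the same length, which is the kind of passage worth reading aloud and revising by hand.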

Why Chasing Low Scores Damages Writing

Many writers chase zero percent scores. That goal harms clarity and tone. Forced edits break meaning. Ideas lose focus. Writing quality drops quietly.

Readers never see detection scores. People notice flow and understanding instead. Clear writing matters more than numbers.

When Detection Scores Should Matter Less

Creative writing confuses detection systems. Stories and opinions follow different patterns. Scores are often misleading in these cases.

Non-native writers face unfair flags. Consistent grammar raises suspicion. Confidence suffers without reason. Context decides relevance. Not every piece of writing needs scanning.

How to Use Scores the Right Way

Detection works best near the final draft stage. Early scanning disrupts thinking. Review highlighted sections calmly. Edit for clarity instead of numbers.

Choose a single reference tool. Multiple detectors create confusion. Treat scores as guidance. Human judgment remains essential.

Final Thoughts

An AI content detector score reflects probability, not truth. Training data shapes every result. Understanding this prevents panic and poor editing choices.

Tools assist the review process. Writers make final decisions. That balance protects content quality and confidence.